Submitted By: admin on June 17, 2024

Source: www.reddit.com

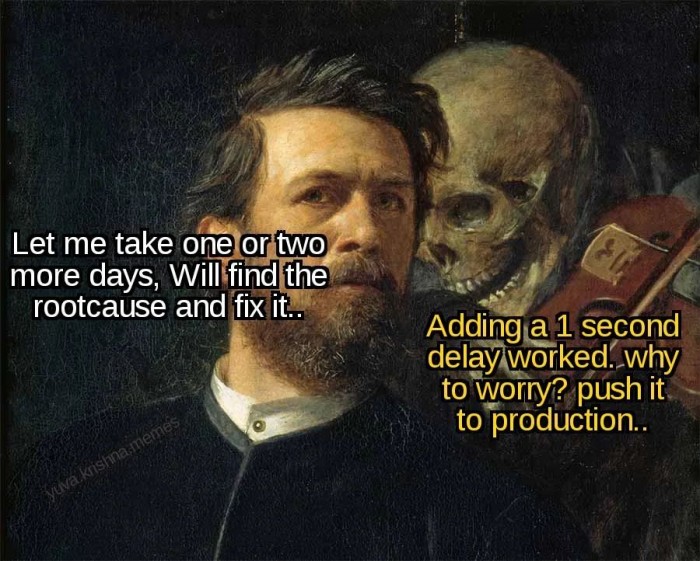

You can either take one or two days to find the root cause and fix it, or add a 1-second delay and push it to production. The question is... will you live peacefully with this skeleton hidden in production, knowing that one day someone might find it and question your skills?