Submitted By: admin on February 27, 2021

Source: www.reddit.com

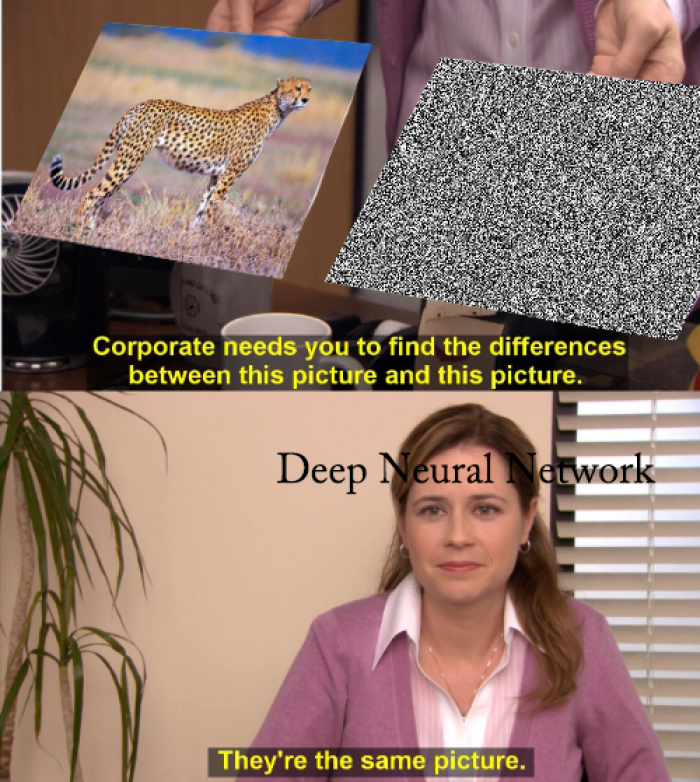

I'm doing a PhD in security for machine learning, and this is actually an extremely dangerous property of nearly all DNN models. It comes from how they 'see' data, and it is exploited in many ML attacks. DNNs don't see the world as we do (obviously), but more importantly, that means images or data can look exactly the same to us yet be completely different to a DNN.

You can imagine a scenario where a DNN in an autonomous car is tricked into misclassifying road signs. To us, a readable STOP sign will always say STOP; even if it has scratches and dirt on it, we can easily interpret what the sign should be telling us. However, an attacker can use noise (similar to the photo of another road sign) to alter the image in tiny ways so that a DNN thinks a STOP sign is actually just a speed limit sign, while to us it still looks exactly like a STOP sign. Deploy such an attack against a self-driving car at a junction with a stop sign and you can imagine how the car would simply drive on rather than stop. You'd be surprised how easy it is to trick AI. Even big companies like YouTube have issues with this in their copyright music detection if you perform complex ML attacks on the music.
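To make the "tiny noise" idea concrete, here is a minimal sketch in Python with NumPy. It is a toy, not a real attack on a real car: the classifier is a hypothetical linear model standing in for a DNN, the weights and the "image" are random, and the labels `STOP` and `SPEED_LIMIT` are made up for illustration. The step itself follows the fast gradient sign idea (move every pixel a tiny amount in the direction that lowers the correct class's score), which is not the sticker method from the physical-world papers.

```python
import numpy as np

def sign_step_attack(x, grad, epsilon):
    """Shift every pixel by at most epsilon against the gradient of the score."""
    x_adv = x - epsilon * np.sign(grad)
    # Keep pixel values inside the valid [0, 1] range.
    return np.clip(x_adv, 0.0, 1.0)

rng = np.random.default_rng(0)
n_pixels = 32 * 32

# Hypothetical "trained" weights and a hypothetical grayscale STOP sign image.
w = rng.normal(size=n_pixels)
x = rng.uniform(0.2, 0.8, size=n_pixels)

# Bias chosen (an assumption for the demo) so the clean image scores about +5.
b = 5.0 - w @ x

def predict(img):
    """Toy linear classifier: positive score means STOP."""
    return "STOP" if w @ img + b > 0 else "SPEED_LIMIT"

# For a linear score w @ x + b, the gradient with respect to x is simply w.
epsilon = 0.02  # each pixel changes by at most 2% of its range
x_adv = sign_step_attack(x, w, epsilon)

print(predict(x), "->", predict(x_adv))
print("largest pixel change:", np.max(np.abs(x_adv - x)))
```

No single pixel moves by more than `epsilon`, but the changes all push the score the same way and add up across roughly a thousand pixels, which is why the label flips while the image looks unchanged to a person. Real DNNs are nonlinear, so an attacker would compute the gradient with a framework's autodiff instead of reading it straight off the weights.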

Here's a paper on a scenario similar to the one I described, except the attack places stickers in specific spots to make an AI not see stop signs: https://arxiv.org/pdf/1707.08945.pdf

- _Waldy_